Abstract

In recent years, Virtual Reality based platforms and technologies have come to play a key role in supporting the training of medical residents and doctors in medicine, including surgery. The focus of this paper is to discuss several platforms including haptic, Virtual Reality, Mixed Reality and distributed collaborative web based cyber training technologies. The design and role of these simulators is discussed in the context of training for two surgical procedures (LISS plating and Condylar plating surgery). These surgical procedures are performed to treat fractures of the femur bone. A comparison of these simulation technologies, including emerging low cost platforms, is presented along with an overview of the impact of using such cyber based approaches on medical training.

Keywords

Virtual Reality (VR), Simulator, Haptic, Immersive, Cloud based, Orthopedic surgery

Introduction

VR based surgical training environments have been developed for various surgical domains such as heart surgery [1, 2] and laparoscopic surgery [3, 4], among others. There are a number of simulators available in the orthopedic surgery field; however, most of them have been built for arthroscopic surgical training. Only a few researchers have focused on the design of VR based simulators for complex fracture related surgical training [5, 6]. Orthopedic medical training is currently limited by the use of traditional methods. Such methods involve training by observing an expert surgeon performing surgery, practicing on cadavers or animals, etc.

Developing VR based simulators for surgical training will help improve and supplement the traditional methods used to train residents and medical students. There are several platforms and technologies available which can be used for creating VR based simulators for orthopedic surgical training. Each of the platforms has unique attributes which make it suitable for training residents in surgery. In this paper, the focus is on the emerging as well as traditional platforms and technologies used in the creation of such simulators. The discussion is presented in the context of orthopedic surgical procedures including Condylar plating [7] and LISS (Less Invasive Stabilization System) plating surgery [8, 9]. Both these surgical procedures are performed by orthopedic surgeons to treat fractures of the femur bone. The four platforms and technologies discussed in this context are:

- Haptic Platform

- Immersive Virtual Reality (VR) Platform

- Mixed Reality (MR) based Platform

- Distributed Web based Platform

Haptic based simulator platforms

Many researchers have created VR based simulators using haptic interfaces and technologies. For example, the design and validation of a bone drilling simulator was presented in [10]. In [11], a VR based simulator that uses haptic feedback to improve the bone-sawing skills of residents was designed and presented. Other researchers have utilized haptic technologies for arthroplasty, osteotomy and open reduction training [12] and for cardiac catheter navigation [13].

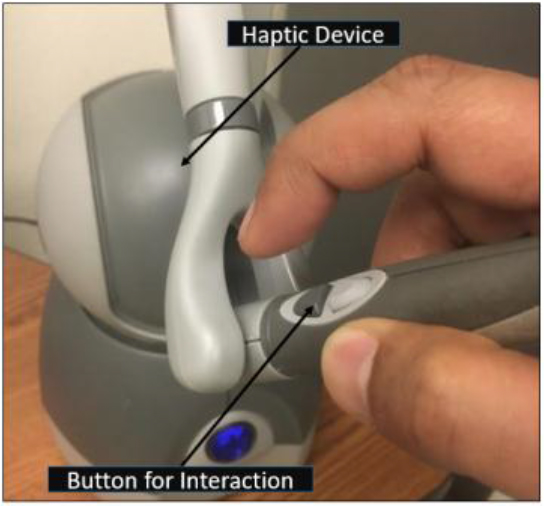

Haptic based simulators built for training medical residents in LISS plating surgery and Condylar plating surgery have been demonstrated to improve understanding of these surgical procedures [7, 8, 14, 41, 42]. These simulators have been developed using the Geomagic Touch™ haptic device, which provides a haptic interface allowing users to touch, grasp and interact with various surgical tools during the training activities. An intuitive ‘feel’ for various tasks during the training activities is provided through the haptic interface. Such tasks include picking up various plates or tools, placing them accurately in a certain location, drilling the bone, screwing the bolts, etc. The haptic device consists of a stylus which is used to interact with the virtual objects. The stylus has two buttons. A resident can pick up an instrument or other virtual object by pressing and holding the dark colored button (figure 1). Pressing the button allows the user to make virtual contact with the target object. The haptic simulator was built using the Unity 3D™ simulation engine with C# and JavaScript. A haptic plugin was used to create support for the haptic based interface. The various virtual objects in the simulation environment, such as bones and surgical implants (for example, Condylar plates and supports), were designed using SolidWorks™. A view of the haptic based LISS plating surgical environment is shown in figure 2.

Figure 1. A haptic device used in surgical training

Figure 2. A view of one of the LISS plating surgical training environments
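The press-and-hold pick-up behavior described above can be illustrated with a minimal sketch. This is not the simulator's code (which was written for Unity 3D with a haptic plugin); the class `StylusGrabber`, the `contact_radius` tolerance and the object names are hypothetical and chosen only to show the logic: an object is picked up when the button is pressed while the stylus tip is in virtual contact with it, follows the tip while the button is held, and is dropped on release.

```python
from dataclasses import dataclass
from math import dist
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


@dataclass
class VirtualObject:
    name: str
    position: Point


class StylusGrabber:
    """Hypothetical press-and-hold pick-up logic for a haptic stylus."""

    def __init__(self, contact_radius: float = 0.01):
        # Assumed tolerance (in scene units) for "virtual contact".
        self.contact_radius = contact_radius
        self.held: Optional[VirtualObject] = None

    def update(self, tip: Point, button_down: bool,
               objects: List[VirtualObject]) -> Optional[VirtualObject]:
        if not button_down:
            # Releasing the button drops whatever was held.
            self.held = None
        elif self.held is None:
            # Button pressed: grab the closest object within contact range.
            near = [o for o in objects
                    if dist(tip, o.position) <= self.contact_radius]
            if near:
                self.held = min(near, key=lambda o: dist(tip, o.position))
        if self.held is not None:
            # A held object follows the stylus tip.
            self.held.position = tip
        return self.held
```

Calling `update` once per frame with the current tip position and button state would be enough to drive this sketch; force feedback is deliberately left out.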

Immersive VR (IVR) Simulator Platforms

Recently, immersive platforms such as Vive™ and Oculus Rift™ have been explored to design VR simulators for medical surgical training contexts [16–18]. These emerging platforms support immersive capabilities at a lower cost compared to traditional technologies such as the VR CAVE™ and PowerWall™. In [18], a comparison between a non-immersive and an immersive Vive based laparoscopic simulator is presented. In [19], residents were tested on scenarios such as appropriate completion of the primary survey, responding to vital cues from the monitor and recognizing fatal situations in a fully immersive VR blunt thoracic trauma simulator. Tests and surveys were conducted to assess the simulator, and the results suggested that it can be used as a viable platform for training.

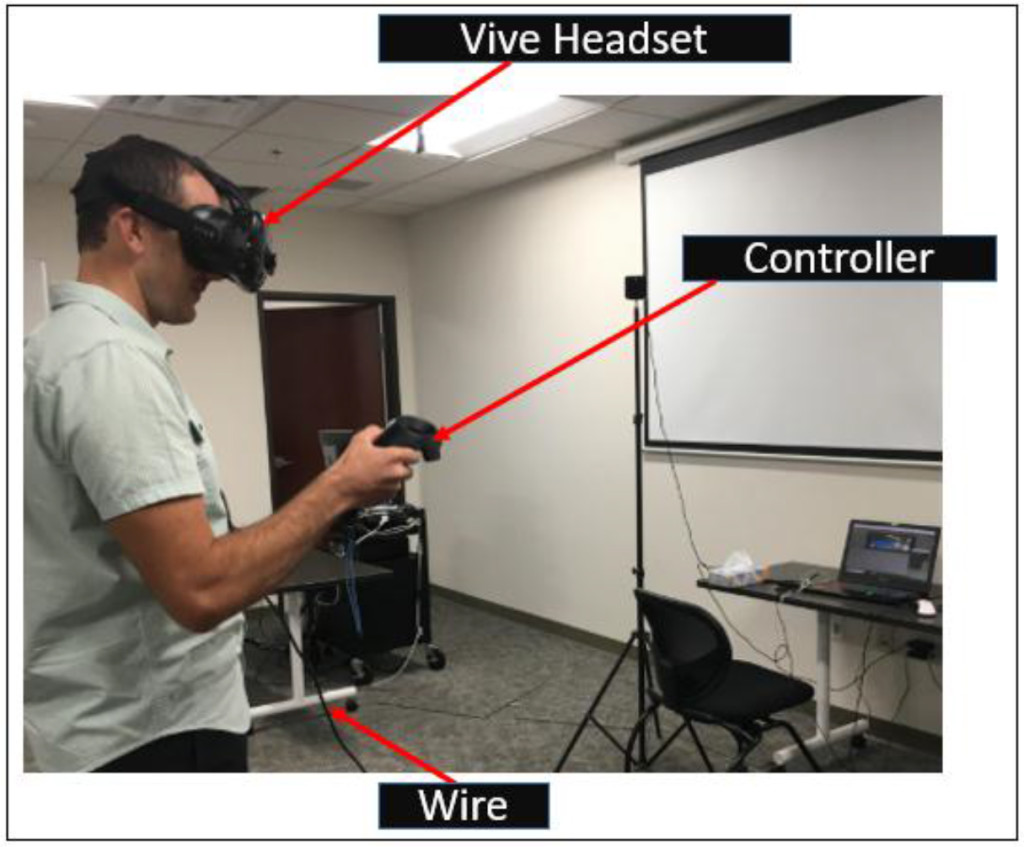

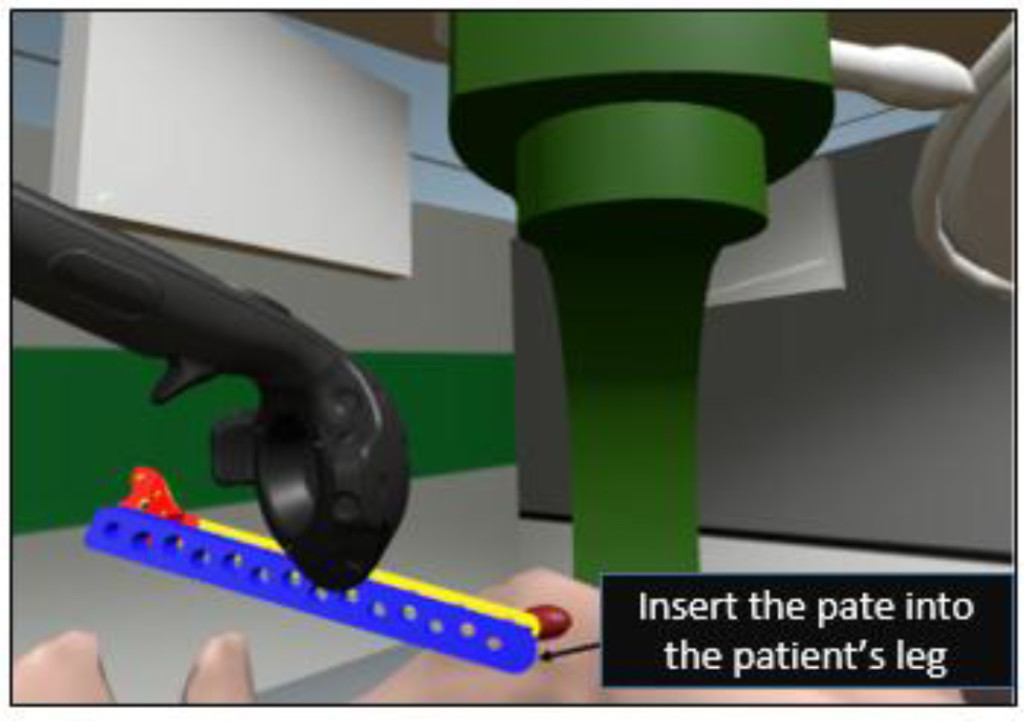

An innovative Immersive VR (IVR) simulator has been developed using the HTC Vive system for both LISS plating and Condylar plating surgical procedures [7]. This Vive based simulator system consists of a fully immersive headset, a pair of wireless handheld controllers and two tracking cameras (figure 3). The cameras are used to track the position of the user in the virtual environment. The Vive headset has a field of view of 110°, which increases the level of immersion and allows users to interact with the environment more naturally. The two wireless handheld controllers allow users to freely explore and interact with the virtual environment. In the context of LISS plating surgery (for example), users (or residents) can perform various surgical tasks such as assembly, screw insertion and tightening, and drilling, among others, using the controllers. A view of a user interacting with the virtual environment wearing the Vive headset and using the controllers can be seen in figure 3. This IVR simulator was also developed using the Unity 3D game simulation engine; C# and JavaScript were used to program the simulation environments. The SteamVR toolkit and a third party VR toolkit were used to build this simulator. The major modules of the IVR simulator include a VR manager, a Simulation Training manager and a User Interface manager. The Simulation Training manager coordinates all the interactions between the simulator and the users. The User Interface manager coordinates the input from the users and transmits it to the required training environment. The VR manager module serves as a bridge between the Vive plugins and the Unity based simulation (graphical) environment. In figure 4, a view of the Condylar plate assembly environment for the IVR simulator is shown.

Figure 3. A user interacting with the IVR simulator

Figure 4. A view of Plate Insertion Training Environment (one of the six training environments developed for IVR condylar plating simulator)
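The division of responsibilities among the three IVR modules can be sketched as follows. This is an illustrative outline only, written in Python rather than the C# used in the actual Unity based simulator; all class names, method names, the raw input format and the 0.5 trigger threshold are assumptions made for this example and do not reflect the simulator's real interfaces.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ControllerEvent:
    controller: str  # e.g. "left" or "right"
    action: str      # e.g. "grab" or "release"


class TrainingEnvironment:
    """Stand-in for one training scenario (e.g. plate insertion)."""

    def __init__(self, name: str):
        self.name = name
        self.log: List[str] = []

    def handle(self, event: ControllerEvent) -> None:
        self.log.append(f"{event.controller}:{event.action}")


class VRManager:
    """Bridge between device plugin data and the simulation environment."""

    def translate(self, raw: dict) -> ControllerEvent:
        # Assumed raw format: {"hand": str, "trigger": float in [0, 1]}.
        action = "grab" if raw["trigger"] > 0.5 else "release"
        return ControllerEvent(raw["hand"], action)


class UserInterfaceManager:
    """Transmits user input to the required training environment."""

    def __init__(self, environments: Dict[str, TrainingEnvironment]):
        self.environments = environments

    def dispatch(self, env_name: str, event: ControllerEvent) -> None:
        self.environments[env_name].handle(event)


class SimulationTrainingManager:
    """Coordinates the interactions between the simulator and the user."""

    def __init__(self, vr: VRManager, ui: UserInterfaceManager, active: str):
        self.vr, self.ui, self.active = vr, ui, active

    def on_raw_input(self, raw: dict) -> ControllerEvent:
        event = self.vr.translate(raw)
        self.ui.dispatch(self.active, event)
        return event
```

Keeping the device bridge separate from input routing means that, in a design like this one, a different headset plugin would only require changes to the VR manager.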

Mixed Reality based Platforms

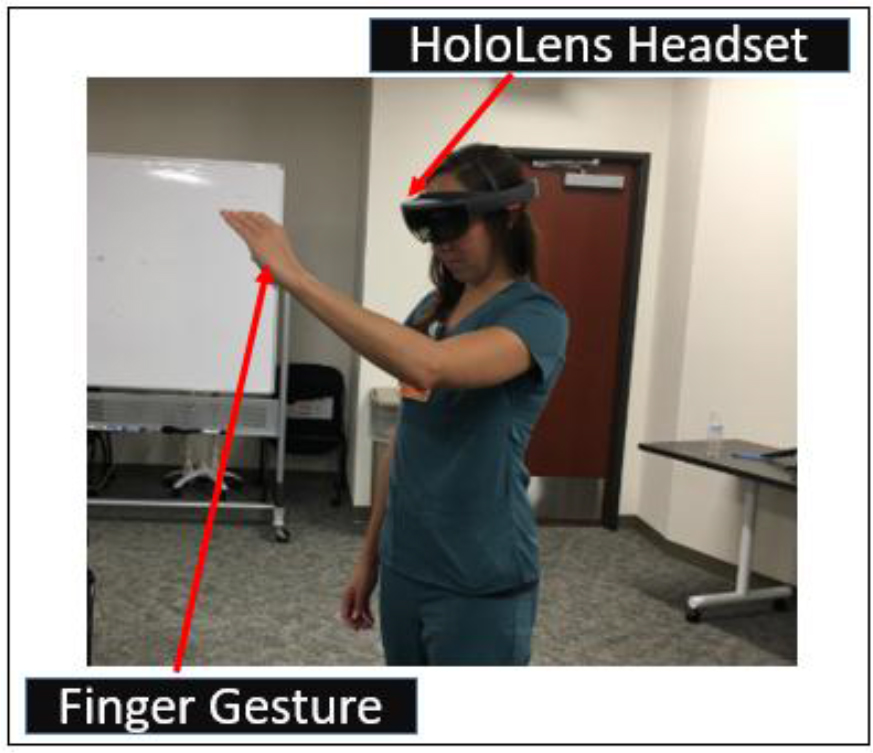

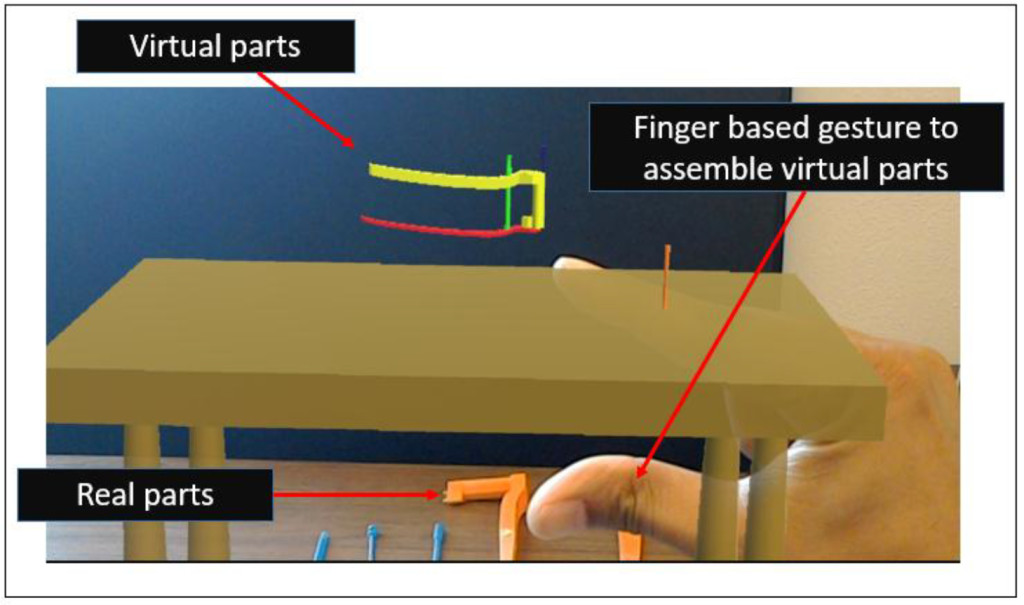

The Mixed Reality (MR) platform typically allows users to interact with both the real and virtual worlds at the same time. The primary advantage of such an MR platform is that a user can be guided by a simulation scenario to practice specific surgical steps using components in a physical environment. This allows a more integrated training scenario where residents and budding surgeons can practice various steps in a procedure with appropriate guidance from the virtual environment (running on the MR headset). An MR based simulator has been built using the Microsoft HoloLens™ platform and the Unity 3D engine [7, 31] to support training activities in both LISS plating and Condylar plating procedures. The HoloLens is an untethered and portable MR based device with which a user can interact with the virtual environment immersively without losing the sense of the real world environment. In the context of surgical training, the user can interact with virtual surgical objects using the finger based gestures supported by the HoloLens and practice the same steps with the corresponding physical surgical instruments, plates and other components. A programming toolkit known as the Windows 10 SDK was used during the building of the simulator. A user can be seen interacting with the HoloLens using finger gestures in figure 5. In figure 6, a view of the user assembling the LISS plating components using finger gestures can be seen.

Figure 5. A medical resident interacting with the HoloLens using finger gestures

Figure 6. A view of one of the HoloLens based training scenarios (to assemble the LISS plate)

Distributed/Web based Platforms

Information centric approaches have gained prominence recently due to the emergence of smart technologies that support web based interactions. However, the need to create such information centric approaches has been underscored by earlier research in manufacturing and engineering; one of the earliest initiatives involved creating an information centric function model to understand the complexities of designing fixtures for use in computer aided manufacturing contexts [33]; other more recent efforts have focused on creating information centric process models to understand the complexities of surgical activities prior to designing and building simulation based training environments in orthopedic surgery [6, 9, 34–38].

Another emerging area revolves around the term ‘Internet of Things (IoT)’, which can play an important role in the development of distributed/web based collaborative frameworks in engineering, medical and other application contexts. IoT, in general, can be described as a network of physical objects or ‘things’ enhanced with electronics, software and sensors [20]; the ‘things’ refer to sensors and other data exchange or processing devices which are part of a connected network linking cyber and physical resources in various contexts. In the healthcare and medical context, the adoption of IoT based approaches can be useful in reducing costs and improving health care quality while facilitating the use of distributed (and remote) software and physical resources. The term ‘Internet of Medical Things’ (IoMT) has been proposed by researchers to refer to IoT for medical applications [21, 22]. However, the term IoMT can lead to misinterpretation as it is also being used by manufacturing researchers to refer to the ‘Internet of Manufacturing Things’ [23, 24]. We propose to use the acronym ‘IoMedT’ to refer to the Internet of Medical Things, which can be defined as an IoT network which links medical devices, software, sensors and other cyber/physical resources to support various activities including tele-medicine, web based surgical training and remote patient monitoring, among others. In the surgical training context, IoMedT based approaches can provide collaborative training to medical residents and students along with providing crucial patient data online to improve their surgical capabilities. The role of collaborative learning has been underscored by only a few researchers in medical education; for such cyber training activities, users and students can interact from different locations through the Internet [25–29]. One such environment is discussed in [30], which outlines a collaborative VR based environment for temporal bone surgery. The authors used a private gigabit Intranet to send only the modifications in drill positions/forces through the network, whereas the model was made available in both locations. Another approach involving a collaborative haptic surgical system is described in [25], where users can simulate surgical processes using virtual tools independently in two different locations. The literature shows an evident lack of cloud based training simulators through which medical residents can practice surgical steps remotely and collaboratively.
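The idea of transmitting only the modifications in drill position and force, while each site keeps its own copy of the model, can be illustrated with a small sketch. It is not the protocol used in [30]; the field names (`x`, `y`, `z`, `force`), the JSON encoding and the tolerance `tol` are assumptions made purely for illustration.

```python
import json
from typing import Dict

DrillState = Dict[str, float]


def make_delta(previous: DrillState, current: DrillState,
               tol: float = 1e-6) -> DrillState:
    """Return only the fields that are new or changed by more than tol."""
    return {
        key: value
        for key, value in current.items()
        if key not in previous or abs(value - previous[key]) > tol
    }


def encode(delta: DrillState) -> bytes:
    """Serialize a delta for transmission (JSON is an assumed format)."""
    return json.dumps(delta, sort_keys=True).encode("utf-8")


def apply_delta(replica: DrillState, payload: bytes) -> DrillState:
    """Update the remote copy of the drill state in place."""
    replica.update(json.loads(payload.decode("utf-8")))
    return replica


# Both sites start from the same state; only the changed fields are sent.
before = {"x": 0.0, "y": 0.0, "z": 0.0, "force": 0.0}
after = {"x": 0.0, "y": 0.0, "z": -0.002, "force": 1.5}
payload = encode(make_delta(before, after))
remote = apply_delta(dict(before), payload)
```

In this example the payload carries two fields instead of four, and the remote replica ends up identical to the local state; the bone model itself never crosses the network.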

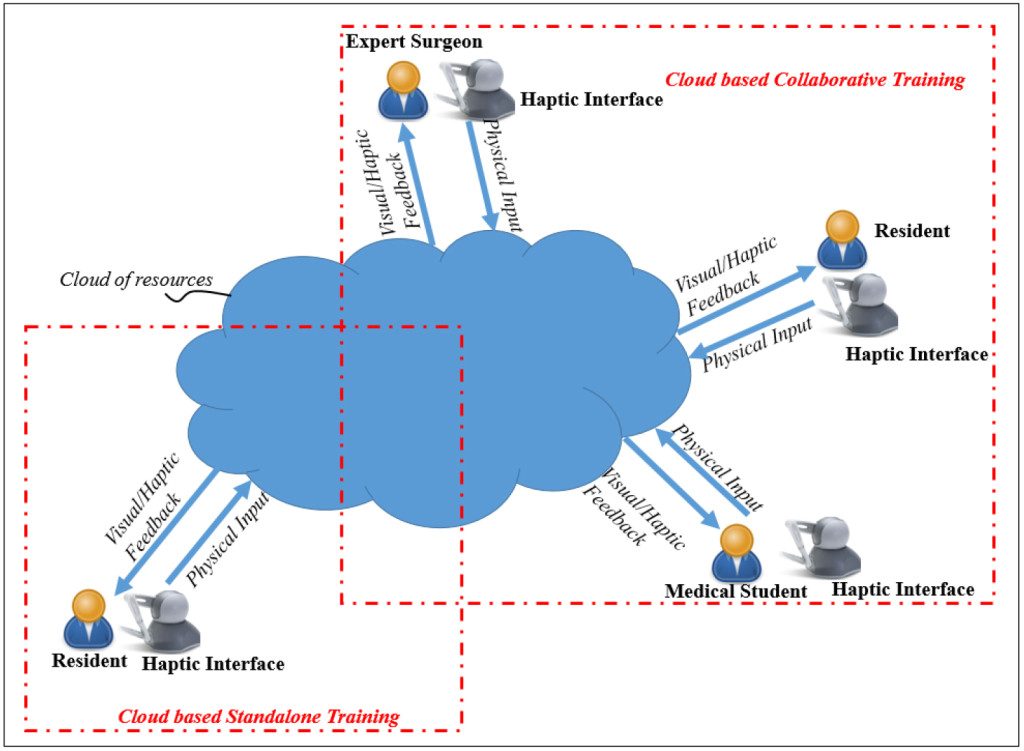

The use of cloud based approaches along with Virtual Reality (VR) based technologies can transform the way medical training is performed. Software Defined Networking (SDN) principles can be used to support such cloud based training approaches; these principles not only reduce the complexity seen in today’s networks, but also help cloud service providers host millions of virtual networks without the need for common separation/isolation methods [9, 15, 32]. In [15], a cloud based training framework to support haptic interface based training activities in LISS plating surgery has been discussed. Using such a framework (figure 7), residents from different locations can interact with an expert surgeon who can guide them or supervise their virtual training activities in a collaborative manner. Initial results on the performance of the network as well as the assessment of learning/training [8, 14, 39, 40, 43] have underscored the potential of such distributed approaches based on IoMedT principles.

Figure 7. A Cloud based IoMedT Framework for Surgical Training

Discussion

An important aspect to emphasize is that during the creation of these four VR based simulation platforms, the role of the expert surgeons was important. They were involved with the design, planning and development of the content of the training modules from the beginning; they provided reading materials and surgery videos along with discussing the surgical procedures in detail to ensure the software engineers gained a better understanding of the details involved. They also provided suggestions on improving the user interface and making it user friendly for the students and residents.

The impact of the four platforms supporting VR based training has been assessed through interactions with surgeons and medical residents at Texas Tech University Health Sciences Center, El Paso, Texas [7–9, 15, 39] and the Burrell College of Osteopathic Medicine (BCOM), Las Cruces, New Mexico.

Survey based comparisons [6, 7, 14, 40] were conducted involving the haptic based, IVR and MR based simulators; the criteria considered included:

1. Head movement/Comfort: did the users feel comfortable interacting with the training environments using the VR headsets (Vive and HoloLens)?

2. Field of view: how much of the training scenarios within the simulation environments could be seen when wearing the HoloLens or Vive device?

3. Navigation: how easily and intuitively did these platforms allow users to explore a target training scenario (walking around, changing direction, zooming in on a specific area, etc.) using a haptic pen (shown in figure 1), finger gestures on the HoloLens platform (figure 5) or a controller on the Vive platform (figure 3)?

4. Ease of interaction: how easy was it for a user to interact with the training environment using the available user interface options?

5. Mobility during training: how much mobility did users have while interacting with the training simulator (could they move freely or was their mobility restricted to a specific position during training)?

The IVR simulator platform received a higher score on the first criterion (head movement/comfort). In terms of the field of view, both the IVR and MR simulators received comparable scores as both allowed users to turn their heads and interact with the environment. In terms of navigation, the three platforms (haptic, IVR and MR simulators) received similar scores. For criterion 4 (ease of interaction), the IVR simulator (with its controllers) and the haptic simulator received higher scores than the MR based simulator (some users had difficulty using the finger gestures to interact with the user interfaces). For criterion 5 (mobility during training), the MR based simulator received the highest score as it allowed users unhindered movement during training. The IVR simulator had wires connected to the headset which could potentially hinder or trip users when they were moving and navigating during a training session (the wires can be seen in figure 3). The costs of these platform devices were comparable. In general, the emergence of both the HoloLens and Vive based platforms provides a low cost alternative to the more traditional, expensive VR environments such as the VR CAVE or PowerWall.

Conclusion

In this paper, four simulator platforms for training medical residents in orthopedic surgery have been discussed: haptic based, immersive, mixed reality based and distributed/web based platforms. These simulators were built to provide residents with training in two orthopedic surgical procedures (LISS plating and Condylar plating) used to address fractures of the femur bone. A comparison of their interactive capabilities, along with results from studies assessing their impact on education and training, was also provided. The results from the learning interactions were predominantly positive, and the comparison studies revealed that the low cost emerging platforms have the potential to be incorporated into medical training activities.

Acknowledgment

We would like to express our thanks to the surgeons, residents and students at the Paul L. Foster School of Medicine in El Paso (Texas) and the Burrell College of Osteopathic Medicine (BCOM), Las Cruces, New Mexico for participating in some of these project activities.

Competing interests

The authors declare that they have no competing interests.

Funding Information

This material is based upon work supported by the National Science Foundation [under grant number CNS 1257803].

References

- Sørensen, T. S., Therkildsen, S. V., Makowski, P., Knudsen, J. L., & Pedersen, E. M. (2001). A new virtual reality approach for planning of cardiac interventions. Artificial Intelligence in Medicine, 22(3), 193–214.

- M. Peters, C. A. Linte, J. More, D. Bainbridge, D. L. Jones, G. M. Guiraudon (2008). Towards a medical virtual reality environment for minimally invasive cardiac surgery. In MIAR 2008, LNCS 5128, pp. 1–11

- Azzie, JT. Gerstle, A. Nasr, D. Lasko, J. Green, O. Henao, M. Farcas, A. Okrainec (2011) Development and validation of a pediatric laparoscopic surgery simulator. In Journal of Pediatric Surgery, vol. 46, pp. 897–903 [Crossref]

- Makiyama, M. Nagasaka, T. Inuiya, K. Takanami, M. Ogata, Y. Kubota (2012) Development of a patient-specific simulator for laparoscopic renal surgery. In International Journal of Urology 19, pp. 829–835 [Crossref]

- Pettersson, J., Palmerius, K. L., Knutsson, H., Wahlstrom, O., Tillander, B., & Borga, M. (2008). Simulation of patient specific cervical hip fracture surgery with a volume haptic interface. IEEE Transactions on Biomedical Engineering, 55(4), 1255–1265.

- Cecil, J., Gupta, A., & Pirela-Cruz, M. (2018). An advanced simulator for orthopedic surgical training. International journal of computer assisted radiology and surgery, 13(2), 305–319. [Crossref]

- Gupta, A., Cecil, J., & Pirela-Cruz, M. (2018, August). A Virtual Reality enhanced Cyber Physical Framework to support Simulation based Training of Orthopedic Surgical Procedures. In 2018 14th IEEE Conference on Automation Science and Engineering (CASE). IEEE.

- Cecil, J., Gupta, A., Pirela-Cruz, M., & Ramanathan, P. (2017). A cyber training framework for orthopedic surgery. Cogent Medicine, 4(1), 1419792.

- Cecil, J., Kumar, M. B. R., Gupta, A., Pirela-Cruz, M., Chan-Tin, E., & Yu, J. (2016, October). Development of a virtual reality based simulation environment for orthopedic surgical training. In OTM Confederated International Conferences "On the Move to Meaningful Internet Systems" (pp. 206–214). Springer, Cham.

- Ming-Dar Tsai, Ming-Shium Hsieh, Chiung-Hsin Tsai (2007) Bone drilling haptic interaction for orthopedic surgical simulator. In Computers in Biology and Medicine, vol. 37, pp. 1709 – 1718 [Crossref]

- Lin, Y., Wang, X., Wu, F., Chen, X., Wang, C., & Shen, G. (2014). Development and validation of a surgical training simulator with haptic feedback for learning bone-sawing skill. Journal of biomedical informatics, 48, 122–129. [Crossref]

- Ming-Dar Tsai, Ming-Shium Hsieh, Shyan-Bin Jou (2001) Virtual reality orthopedic surgery simulator. In Computers in Biology and Medicine, vol. 31, pp. 333–351 [Crossref]

- J.W. Park, J. Choi, Y. Park, K. Sun (2011) Haptic Virtual Fixture for Robotic Cardiac Catheter Navigation. In Artificial Organs, ISSN 0160-564X, Vol. 35, Issue 11, pp. 1127–1131 [Crossref]

- Cecil, J., Gupta, A., Pirela-Cruz, M., & Ramanathan, P. (2018). A Network-Based Virtual Reality Simulation Training Approach for Orthopedic Surgery. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM), 14(3), 77.

- Cecil, J., Gupta, A., Ramanathan, P., & Pirela-Cruz, M. (2017, April). A distributed collaborative simulation environment for orthopedic surgical training. In 2017 Annual IEEE International Systems Conference (SysCon) (pp. 1–8). IEEE.

- Suresh, P. and Schulze, J.P., 2017. Oculus Rift with Stereo Camera for Augmented Reality Medical Intubation Training. Electronic Imaging, 2017(3), pp.5–10.

- Egger, J., Gall, M., Wallner, J., Boechat, P., Hann, A., Li, X., Chen, X. and Schmalstieg, D., 2017, March. Integration of the HTC Vive into the medical platform MeVisLab. In SPIE Medical Imaging (pp.

- Huber, T., Paschold, M., Hansen, C., Wunderling, T., Lang, H. and Kneist, W., 2017. New dimensions in surgical training: immersive virtual reality laparoscopic simulation exhilarates surgical staff. Surgical Endoscopy, pp.1–6. [Crossref]

- Harrington, C. M., Kavanagh, D. O., Quinlan, J. F., Ryan, D., Dicker, P., O’Keeffe, D., & Tierney, S. (2018). Development and evaluation of a trauma decision-making simulator in Oculus virtual reality. The American Journal of Surgery, 215(1), 42–47. [Crossref]

- http://www.techopedia.com/definition/28247/internet-of-things-iot

- https://www.healthcaretechnologies.com/the-internet-of-medical-things-internet-of-things-health

- Jha, N. K. (2017, May). Internet-of-medical-things. In Proceedings of the on Great Lakes Symposium on VLSI 2017 (pp. 7–7). ACM.

- Zhang, Y., Zhang, G., Wang, J., Sun, S., Si, S., & Yang, T. (2015). Real-time information capturing and integration framework of the internet of manufacturing things. International Journal of Computer Integrated Manufacturing, 28(8), 811–822.

- Cutler, T. R. (2014). The internet of manufacturing things. Ind. Eng, 46(8), 37–41.

- Chebbi, B., Lazaroff, D., Bogsany, F., Liu, P. X., Ni, L., & Rossi, M. (2005, July). Design and implementation of a collaborative virtual haptic surgical training system. In IEEE International Conference Mechatronics and Automation, 2005 (Vol. 1, pp. 315–320). IEEE.

- Paiva, P. V., Machado, L. D. S., Valença, A. M. G., De Moraes, R. M., & Batista, T. V. (2016, June). Enhancing Collaboration on a Cloud-Based CVE for Supporting Surgical Education. In 2016 XVIII Symposium on Virtual and Augmented Reality (SVR) (pp. 29–36). IEEE.

- Tang, S. W., Chong, K. L., Qin, J., Chui, Y. P., Ho, S. S. M., & Heng, P. A. (2007, March). ECiSS: A middleware based development framework for enhancing collaboration in surgical simulation. In Integration Technology, 2007. ICIT’07. IEEE International Conference on (pp. 15–20). IEEE.

- Jay, C., Glencross, M., & Hubbold, R. (2007). Modeling the effects of delayed haptic and visual feedback in a collaborative virtual environment. ACM Transactions on Computer-Human Interaction (TOCHI), 14(2), 8.

- Acosta, E., & Liu, A. (2007, March). Real-time interactions and synchronization of voxel-based collaborative virtual environments. In 2007 IEEE Symposium on 3D User Interfaces. IEEE.

- Morris, D., Sewell, C., Blevins, N., Barbagli, F., & Salisbury, K. (2004, September). A collaborative virtual environment for the simulation of temporal bone surgery. In International Conference on Medical Image Computing and Computer-Assisted Intervention (pp. 319–327). Springer Berlin Heidelberg.

- Cecil, J., Gupta, A., & Pirela-Cruz, M. (2018, April). Design of VR based orthopedic simulation environments using emerging technologies. In 2018 Annual IEEE International Systems Conference (SysCon) (pp. 1–7). IEEE.

- Cecil, J., Cecil-Xavier, A., & Gupta, A. (2017, August). Foundational elements of next generation cyber physical and IoT frameworks for distributed collaboration. In 2017 13th IEEE Conference on Automation Science and Engineering (CASE) (pp. 789–794). IEEE.

- Cecil, J., (2002) A Functional Model of Fixture Design to aid in the design and development of automated Fixture Design systems, Journal of Manufacturing Systems, Vol. 21, No.1, pp.58–72

- Cecil, J. and Cruz, M., (2015) An Information Centric framework for creating virtual environments to support Micro Surgery, International Journal of Virtual Reality, Vol. 15, No. 2, pp. 3–18.

- Cecil, J., and Cruz, M., (2011) An Information Model based framework for Virtual Surgery, International Journal of Virtual Reality, Vol 10 (2), pp. 17–31

- Cecil, J., Pirela-Cruz, M., (2013) An information model for designing virtual environments for orthopedic surgery, Proceedings of the 2013 EI2N workshop, OTM Workshops, Graz, Austria. Vol 8186, Lecture Notes in Computer Science, pp. 218–227. Springer.

- Cecil, J., Pirela-Cruz, M., Information Model for a Virtual Surgical Environment, presentation at the 2010 Industrial Engineering Research Conference, Cancun, Mexico, June 5 -9, 2010

- Cecil, J., Pirela-Cruz, M., Development of an Information Model for Virtual Surgical Environments, Proceedings of the 2010 Tools and Methods in Competitive Engineering, Ancona, Italy, April 12–15, 2010.

- Cecil, J., Gupta, A., Pirela-Cruz, M., & Ramanathan, P. (2018). An IoMT-based cyber training framework for orthopedic surgery using next generation internet technologies. Informatics in Medicine Unlocked.

- Cecil, J., Gupta, A., Pirela-Cruz, M., The Design of IoT based smart simulation environments for orthopedic surgical training, accepted for presentation and publication in the proceedings of the Twelfth International Symposium on Tools and Methods of Competitive Engineering (TMCE 2018),Las Palmas de Gran Canaria, Gran Canaria, Spain, May 7 – 11 2018.

- Cecil, J., Gupta, A., Pirela-Cruz, M., Design of a Virtual Reality Based Simulation Environment and its Impact on Surgical Education, 2016 International Conference of Education, Research and Innovation (ICERI), Seville, Spain, Nov 14–16, 2016.

- Cecil, J., P. Ramanathan. M. Cruz, and Raj Kumar, M.B., A Virtual Reality environment for orthopedic surgery, Proceedings of the 2014 EI2N workshop, Amantea, Calabria, Italy, Oct 29–30.

- Cecil, J., Ramanathan, P., Prakash, A., Pirela-Cruz, M., et al., Collaborative Virtual Environments for Orthopedic Surgery, Proceedings of the 9th annual IEEE International Conference on Automation Science and Engineering (IEEE CASE 2013), August 17 to 21, 2013, Madison, WI.